Recently, Large Language Models have received a lot of attention. Some results are impressive, but I find the hype around them largely unjustified. A large portion of it probably comes from companies trying to secure funding for the expensive research, training and operation of these models, or from freeloaders. But since they are available, let's see how they perform with medieval texts, handwriting and Latin.

What LLMs are

I should probably first clarify what Large Language Models (LLMs) are. Yes, I try to avoid the term AI for them; I'm a skeptic. They are models capable of processing and generating natural language. They acquire this capability by ingesting a large corpus of text and learning its statistical relationships through machine learning. They are further refined through fine-tuning and reinforcement learning, while additional context can be supplied at use time through the prompt (prompt engineering). That is why these models usually come as a chat interface, which also makes them easy to use. Examples include the GPT family, Gemini and LLaMA. For testing, I used the free versions of GPT-4o and GPT-4o mini in ChatGPT as well as Microsoft's Copilot.

Challenges with Charters in Latin

As an example, I used a short Latin charter from the 13th century (1290). I wanted to transcribe it, but I lack formal education in Latin, so I had difficulties with the grammar. I know the general structure of the sentences, some vocabulary and which cases exist. Still, I lack knowledge of inflection and other grammatical rules, and I do not have the time to acquire it.

The problem with Latin charters is that the vast majority of words are abbreviated. Scribes especially tend to leave out word endings, which carry the inflection information (-us in the simplest case). Sometimes they also use ligatures for this. They mark abbreviations with superscript or subscript strokes. Charters also often contain recurring legal formulas, which are frequently abbreviated as well. You have to know these formulas in order to make sense of them.

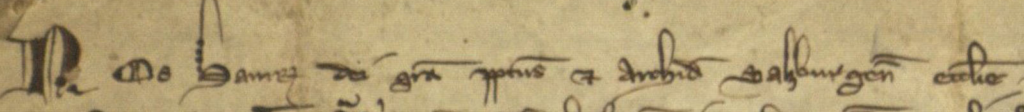

An example of abbreviated content is the opening line of the charter. It reads „Nos Hainr dei gra pptus et archid Salzburgen ecclie“ (ligatures not rendered, see picture). Expanded, this becomes „Nos Hainricus dei gratia praepositus et archidiaconus Salzburgensis ecclesie“. You see the problem. First, you have to make sense of the handwriting. Then you need a large vocabulary to find the right match for each abbreviation. Finally, you have to be well versed in grammar to get the transcription right.
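The expansion step itself can be illustrated with a small Python sketch. It assumes a hypothetical, hand-built table (`ABBREVIATIONS`) that contains only the pairs from the opening line above; it is not a real abbreviation dictionary. For this one line a plain lookup suffices, but in general the correct ending depends on case and number in the surrounding sentence, which is exactly the grammatical knowledge a fixed table cannot supply.

```python
# Hypothetical lookup table built only from the opening line of this charter.
# Real abbreviations are context-dependent: the same short form can expand
# to different inflected endings depending on the sentence.
ABBREVIATIONS = {
    "Hainr": "Hainricus",
    "gra": "gratia",
    "pptus": "praepositus",
    "archid": "archidiaconus",
    "Salzburgen": "Salzburgensis",
    "ecclie": "ecclesie",
}


def expand(line: str) -> str:
    """Replace each known abbreviated token with its expansion.

    Tokens not in the table are kept unchanged.
    """
    return " ".join(ABBREVIATIONS.get(token, token) for token in line.split())


if __name__ == "__main__":
    print(expand("Nos Hainr dei gra pptus et archid Salzburgen ecclie"))
    # Nos Hainricus dei gratia praepositus et archidiaconus Salzburgensis ecclesie
```

Tokens missing from the table, such as „Nos“, „dei“ or „et“, pass through unchanged.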

LLMs to the rescue

I did the transcription, but there were some words I couldn't make sense of. I also needed help with the grammar. First, I asked about the inflection of a specific verb in the context of a sentence. Since the results were good, I quickly moved on to giving the model my transcription so far and going through the details. It felt a bit like a conversation with someone who knows more Latin than I do. For example, I had the fragment „Et up quo damus lram istam testeamus.“, which contained some abbreviations. The model responded:

“Et up quo damus lram istam testeamus”: This seems to be a slightly garbled version of a standard legal phrase. The corrected phrase is “Et per hoc damus hanc lram istam testeamus,” which means “And by this we give this document, we attest.” Here, “lram” should be corrected to “litteram” (letter/document).

Resolving „lram“ to „litteram“ was helpful, but here we also see that we have to be extremely careful when using these models, because they tend to generate content like the „corrected phrase“ above, which contains words such as „hanc“ that are not present in the original document. In the end, I settled on „Super quo damus litteram istam testeamus“.

I got bolder and gave the model the image link, because I knew it was supposed to be capable of image recognition as well. It responded with:

Thank you for providing the document image link. After reviewing the image …

It told me it had „reviewed“ the image. I find it somewhat problematic that the output attributes anthropomorphic traits to the model itself. But here we are: it seems to be part of the user experience. Judging from what followed, and because it still suggested „hanc“, I don't think the image was actually „reviewed“. Another thing to be careful about.

This went on for a while until I ended up with a transcription that resolved the abbreviations the way I thought was right. I then entered the whole text and asked the model to make any necessary grammatical corrections. You may have to repeat this a few times, as the model might find further mistakes after correcting earlier ones. At this stage the model also reported a change to „Capituli Salzburgensi“, but I could not see what had changed; it looked the same to me. So I asked about it, and the model „admitted“ (see how I keep using anthropomorphisms?) that it had not changed anything and had reported a change where none was made. Again, we need to be careful. The final transcription was:

Nos Hainricus dei gratia praepositus et archidiaconus Salzburgensis ecclesie tenore praesentium recognoscimus et fatemur ego super scriptas tres hubas in Lungowe contulimus Engelramo et fratribus suis filiis Raimlim de Æenach iusto feodali tytulo vnâ cum heredibus eorum perpetuo possidendas praesertim cum huiusmodi hube ad nostram collationem et Capituli Salzburgensi pertineant pleno iure. Sunt autem nomina ipsarum hubarum vna in Purchstal iuxta Tæmswich et vna in Prukdorf et vnâ huba in Stranach in monte Chætzperge. Super quo damus litteram istam testeamus. Datum in Mouterdorf anno domini millesimo ducentisimo nonagesimo in crastino Sancti Nycolaii

I then asked the model to translate this into German to test its translation capabilities. The only other machine translation service I know that handles Latin is Google Translate, which yields mixed results. The model translated „huba“ as „Höhle“ (cave), but noted that I should revise this if it meant farm. I told it that it meant farm and that it should revise the translation. I also mentioned that „praepositus“ translates to „Propst“ and that „archidiaconus“ should be either „Erzdiakon“ or „Erzpriester“. Earlier, I had told it that „in crastino“ means the day after the named feast day. These are examples of providing context and feedback through the prompt (prompt engineering). The result was:

Wir, Heinrich durch Gottes Gnade Propst und Erzdiakon der Kirche von Salzburg, erkennen durch diese Urkunde an und bekennen, dass wir die drei Höfe in Lungowe Engelram und seinen Brüdern, den Söhnen Raimlim von Æenach, auf rechtmäßigen feudalen Titel zusammen mit ihren Erben zur ewigen Besitzausübung übergeben haben, insbesondere da solche Höfe zu unserem Patronat und dem des Salzburger Kapitels vollgültig gehören. Die Namen der besagten Höfe sind: einer in Purchstal bei Tæmswich, einer in Prukdorf und ein Hof in Stranach am Berg Chætzperge. Darüber geben wir dieses Dokument aus. Ausgestellt in Mouterdorf im Jahre des Herrn 1200 am Tag nach dem Fest des Heiligen Nikolaus.

This is quite a good translation (OK, it got the year wrong: „millesimo ducentisimo nonagesimo“ is 1290, not 1200) and definitely better than what Google Translate manages in most cases.
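The year in the dating clause can be checked mechanically, because the Latin ordinal words simply add up. The Python sketch below assumes a hypothetical, deliberately tiny table of ordinal words (`ORDINALS`); it includes both the classical spelling „ducentesimo“ and the charter's spelling „ducentisimo“.

```python
# Hypothetical, minimal table of Latin ordinal number words in the ablative,
# as used in dating formulas such as "anno domini millesimo ...".
# Only the words needed for this charter (plus "octavo") are included.
ORDINALS = {
    "millesimo": 1000,
    "ducentesimo": 200,  # classical spelling
    "ducentisimo": 200,  # spelling used in this charter
    "nonagesimo": 90,
    "octavo": 8,
}


def year_from_words(phrase: str) -> int:
    """Sum the values of the ordinal words in a dating phrase."""
    return sum(ORDINALS[word] for word in phrase.lower().split())


if __name__ == "__main__":
    print(year_from_words("millesimo ducentisimo nonagesimo"))  # 1290
```

Any word missing from the table raises a `KeyError`, which is arguably the right behaviour for a check like this: an unknown word should be looked up rather than silently ignored.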

Fully automatic transcription?

So I got what I wanted. Next, I wanted to know whether the model could transcribe the charter automatically for me. First, I went to Microsoft Copilot and gave it the image link along with a bit of context: that it was a medieval Latin charter from 1290. Copilot responded that transcribing such documents is tricky because of the handwriting and abbreviations. It suggested I try Transkribus, as it has a model specifically trained for handwriting. Fair enough. I already knew Transkribus and have followed its development for a few years. More about that in a separate article.

Then I gave ChatGPT the image link and asked for a transcription, but this time I left out the context. It told me it could not transcribe from a link but that I could upload the image. So I did, and it output the following:

In dei nomine, amen. Nos fratres et universitas capituli ecclesie Salzburgensis recognoscimus, quod nobis, in presencia honorabilium virorum dominorum Heinrici de Tannhoven, et Rvdigeri de Hohenegge, et Wolframi de Hag, et aliorum plurimorum fide dignorum, Engelhardus de Stamech, miles, fideius forus constitutus est pro domino Federico de Hohenecke, milite, sub pena infrascripta. Acta sunt hec anno domini Mo. CCo. XCo. VIIIo. et die veneris post festum Sancti Andree apostoli.

This is a formal document likely from the ecclesiastical administration in Salzburg, dated to the year 1290 (as indicated by the year mentioned in Roman numerals). The language appears to be medieval Latin. The document records that a certain Engelhardus de Stamech, a knight, has become a surety for Lord Frederic de Hohenecke in the presence of several reputable men.

ChatGPT claims that it does not access data from other conversations (in which I had worked with it on the same charter), so it likely did analyse some parts of the image correctly. It got the year and the language right, as well as the fact that the document came from the ecclesiastical administration in Salzburg. Everything else, however, is a completely different text. This is what is called a „hallucination“: output that fits the context and looks plausible but is wrong. That is why it is dangerous to use the output of these models naively.
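The date written in the first output, „Mo. CCo. XCo. VIIIo.“, can be checked against its claim of 1290. Here is a minimal Python sketch, assuming each numeral group is written in capitals followed by a lowercase superscript-style „o“ and a dot, as in the output above; the function names `roman_to_int` and `medieval_year` are hypothetical.

```python
import re

VALUES = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}


def roman_to_int(numeral: str) -> int:
    """Convert a Roman numeral using the subtractive rule (e.g. XC = 90)."""
    total = 0
    for i, ch in enumerate(numeral):
        value = VALUES[ch]
        # A smaller value directly before a larger one is subtracted (XC, IV).
        if i + 1 < len(numeral) and value < VALUES[numeral[i + 1]]:
            total -= value
        else:
            total += value
    return total


def medieval_year(date_text: str) -> int:
    """Add up numeral groups such as "Mo. CCo. XCo. VIIIo.".

    The match is case-sensitive, so the lowercase "o" markers and the dots
    are skipped; each capital-letter group is converted separately.
    """
    groups = re.findall(r"[IVXLCDM]+", date_text)
    return sum(roman_to_int(group) for group in groups)


if __name__ == "__main__":
    print(medieval_year("Mo. CCo. XCo. VIIIo."))  # 1298
```

Converting each group separately mirrors how the date is written (thousand, hundreds, tens, units) and avoids accidental subtraction across group boundaries.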

I told it: „The roman numerals you did output resolve to the year 1298 and the text does not resemble the content of the image“. So it tried again, and the result was:

Nos H. dei gratia preposius et S. subdecanus Salczburgensis ecclesie, necnon et fratres nostri universi capituli eiusdem uniuersitati uestre B . . ., uiri . . . de Einchenstat, vestrum generale responsum super quibusdam litteris venerabilis patris nostri C. electi Salzburgensis nuper ad . . . de Brikcilis directis . . .

It started out largely correctly, but then it deteriorated quickly and the rest is garbage. I did not expect the model to be capable of a task that is challenging even for humans, but I wanted to explore the limits of what is possible.

Summary

For my use case, fixing the Latin grammar of a transcribed charter and finding expansions for its abbreviations, I was quite successful. It took a bit of work and iterative refinement, but having „help“ available shifted the process from challenging to achievable. For this purpose, I'm quite satisfied with the capabilities of LLMs. The problem is that it quickly feels like a conversation with a real person, so you are quick to project human traits onto the machine. But, as hallucinations and the other problems described above show, using the model naively is not justified. Used properly as a tool, it can be helpful; used naively and blindly, it can be dangerous, depending on the area of application.