I digitized a few books for the Municipal Archive of Gmünd (Stadtarchiv Gmünd in Kärnten) which are of local interest but were out of print with few copies in libraries or privately owned. Here I describe the process of how I did that.

As a disclaimer I have to add that you have to respect the author’s rights. It is not allowed to just scan a book and make it publicly available. You either have to get permission or the author’s rights have to be expired. In the European Union this happens with the 1st of January following 70 years after the death of the author. Then the book enters the public domain.

Also libraries and other institutions have their own digitization programs where already a large number of works have been made available online. Those institutions also often have better (i.e. more expensive) technology to do this at a larger scale. Those programs have their own way of prioritizing which books to digitize. They often focus on high impact works or out of preservation reasons on fragile works or those that get accessed often. This also means that works of low impact or of only local importance usually never enter those programs.

But there are also digitization-on-demand services emerging. They offer to digitize public domain books for a fee. After a while such books are also available to the public. One example for such a service is eod books.

Getting the book

The municipal archive has their own library. Otherwise my go-to libraries are university libraries. They also often have book scanners available. I have used the scanners at the Vienna University Library and the University Library of Klagenfurt (Zeutschel zeta). Book scanners are built to digitize books or a range of pages without putting too much strain on the spine. Especially for fragile works you could damage them if you put them on a flatbed scanner and pressing the pages against the glass. The scanners also aid you with post-processing such as de-skew, cropping and de-warping but often with mixed results.

Scanning the book

One of the most difficult to correct problems you can introduce during scanning are warped lines. They not only do not look great also optical character recognition (OCR) produces less reliable results. Warping occurs if the page is not perpendicular to the sensor. Since a book scanner uses an overhead light approach the binding can cause the pages to bend when the book is lying flat on a surface. Some scanners use book cradles to minimize the problem and also have de-warping software built in. The book scanners I was using had mixed results with de-warping. If you want to avoid warped lines at all cost you have to either straighten the pages somehow (using clamps) or use a V-shaped setup with glass plates – or you have to use a flatbed scanner.

De-skewing (rotating) to produce flat text lines is normally no problem for most post-processing software. Some also offer de-warping, but the software I tried (mostly open-source) had mixed results on that. There are different de-warping algorithms. The more advanced of them use machine learning techniques. Unfortunately, I did not find an easy to use solution. There are a lot of research papers but not much usable open-source software. Also the machine learning approaches often require a training dataset which I do not have.

So whenever the book allows I resort to a flatbed scanner to avoid warped lines as I do not have access to the more capable and more expensive book scanners (yes, you can build this yourself, but for me this is out of scope).

Post-processing

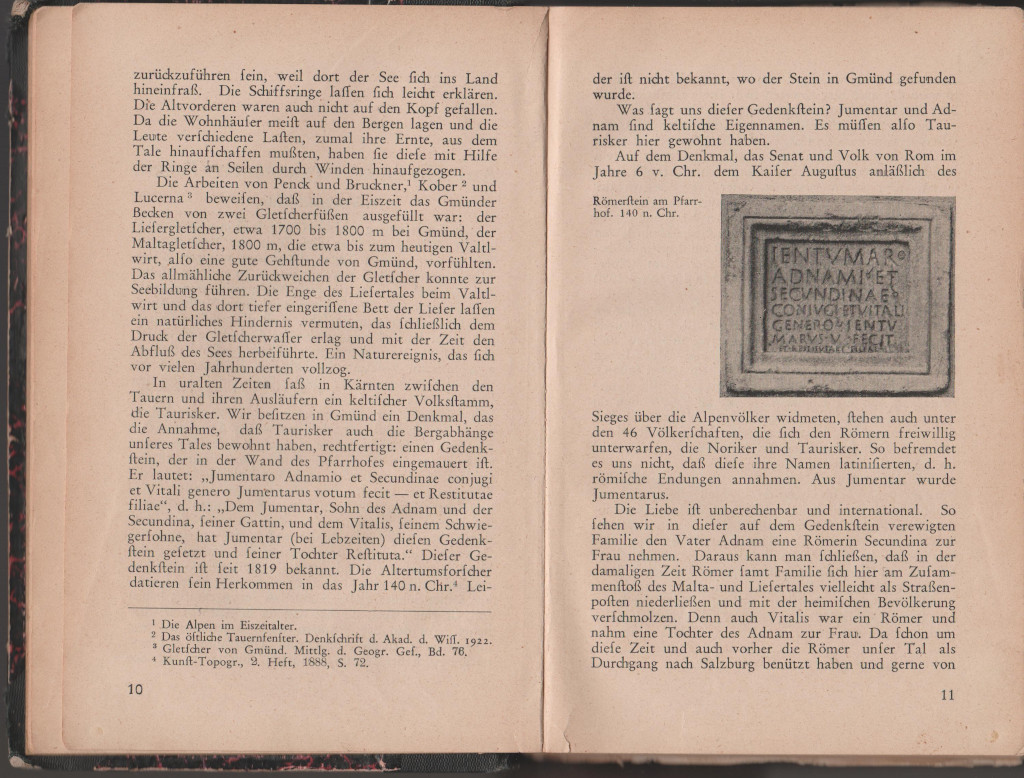

Based on your settings you end up with a color, grayscale or black-white (not recommended, it’s easy to leave something out later on but you cannot add what is not in the original data) image of the book page(s). In the case of the example here the book was small (format A5) so it was possible to scan two pages at once which reduces scan time. On the downside you have two pages next to each other but in the resulting document we want to have each page on its own. Additionally, we have a lot of things on the image which we do not want such as the jacket. So we have to post-process the images.

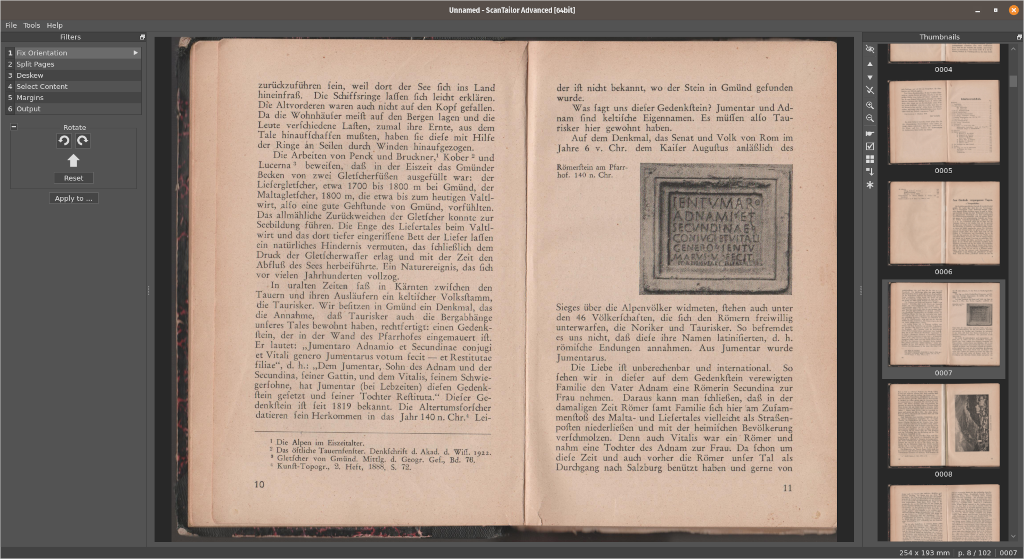

For this I use ScanTailor, a capable open-source tool, which has been abandoned and been picked up by new developers multiple times. Unfortunately, you have to build it yourself, which is a bit of a showstopper for the average user. If you build it then I recommend doing so in a container image or VM to avoid polluting your system with the build dependencies.

It ingests image files for the document’s pages. If you ended up with a PDF after scanning you first have to transform its pages into images. You can use ImageMagick for that. It is important that you know the DPI value of your scan so that you pick the right value. You can downsample but if you pick a larger value than scanned you end up with reduced quality.

$ convert -density 300 -scene 303 scan_2.pdf images/%04d.pngAbove command shows how to use ImageMagick to convert a PDF scan (second part) to PNG images with 4 digits as file name starting with 0303 using 300 dpi. After you obtained the images you can import them into a ScanTailor project.

After importing the images you get the workflow on the left side, the current image in the center and the other images on the right side. In a first step the program can fix the orientation, then split up pages if necessary, deskew them, find the content, remove the rest and add margins and then do output processing. By selecting the arrow on the steps you trigger batch processing. It is always a good idea to inspect the result of the automatic processing and correct it if necessary. Sometimes the deskew could be off or not all of a page’s content might have been detected. You can do manual corrections or adjustments for all steps.

The real magic happens at the “Output” step. There ScanTailor takes the content, removes everything else adds the margins and applies a threshold color for black-white output if instructed to do so. You can configure the threshold value per page if the result has to be optimized. There is also a de-speckling and de-warping function available. De-speckling removes leftover black speckles after the threshold has been applied. De-warping tries to correct warped lines, this is also configurable per page, but I my results with that were not really satisfying in all cases. If there are images on the page ScanTailor can also apply a “mixed mode” where it detects the image and does not apply the threshold to it. It can also adjust the luminosity of the image so that it matches the rest of the page.

Do not forget to adjust the DPI value for the output images to the desired value. The default is 600 dpi but normally you will want to use 300 dpi for smaller file size. ScanTailor outputs the images into an output directory. If a page contains a grayscale image I usually post-process it with an image processing tool like GIMP to de-saturate the page.

PDF creation

After the images have been processed you might want to produce a PDF file out of them. ScanTailor outputs images in TIFF format. For easier post-processing I convert them to PNG, but you can also use TIFF directly. To produce the PDF you can use different tools. You can use ImageMagick again:

$ convert output/*.png output.pdfAs a last step it might be a good idea to convert the produced PDF into a file that adheres to a PDF standard and thus is suitable for long-term preservation. With PDF/A there is such a standard. I have chosen to use PDF/A-2b because of wide adoption. In theory it should be possible to convert a PDF to PDF/A-2b with Ghostscript. Unfortunately, I was not able to produce a PDF with it that also validated correctly. So I resorted to purchasing PDF Studio, a cross-platform PDF editor which is also capable of creating documents according to various standards (also PDF/A-2b) and validating them.

Why doing all this?

The benefit of doing all that post-processing with ScanTailor is a smaller document (for the example 33 MB instead of 100 MB for the raw images) because a lot of unnecessary stuff is removed. There is also more comfort because in the example document the scanned double pages have been split into separate ones. As a bonus step we could do OCR to make the document searchable, which will be covered in other posts.

The result of the example provided can be found under ark:/65325/r20bwr.